Google Drive Deletes Files If It Considers Their Content to Be "Inappropriate" (Although Legal)

The Mountain View company announced it would wait two weeks (until the end of December or the beginning of January) before starting to scan the content of its users' files hosted in the cloud in order to detect files it considers "inappropriate", which are not necessarily illegal.

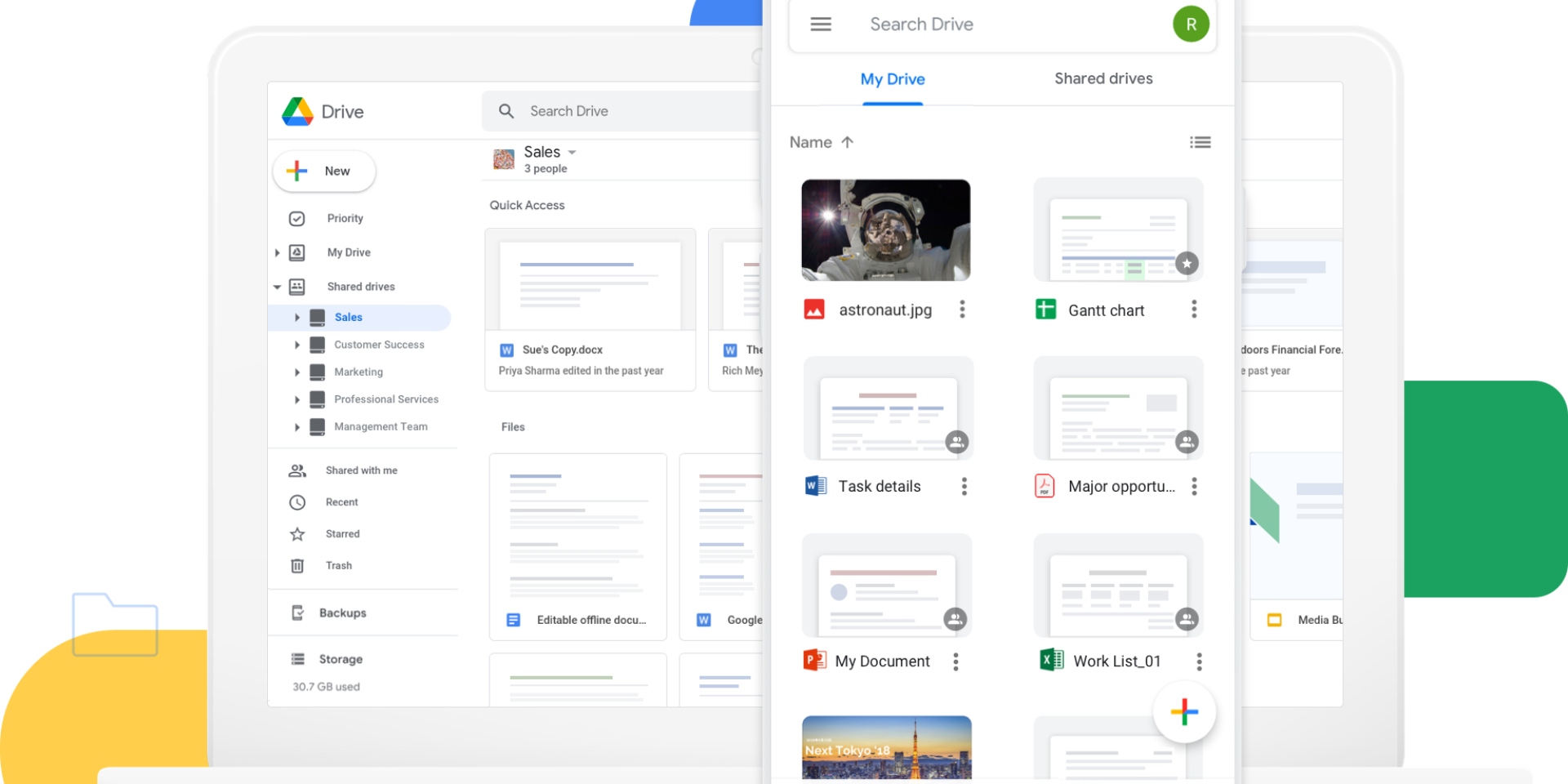

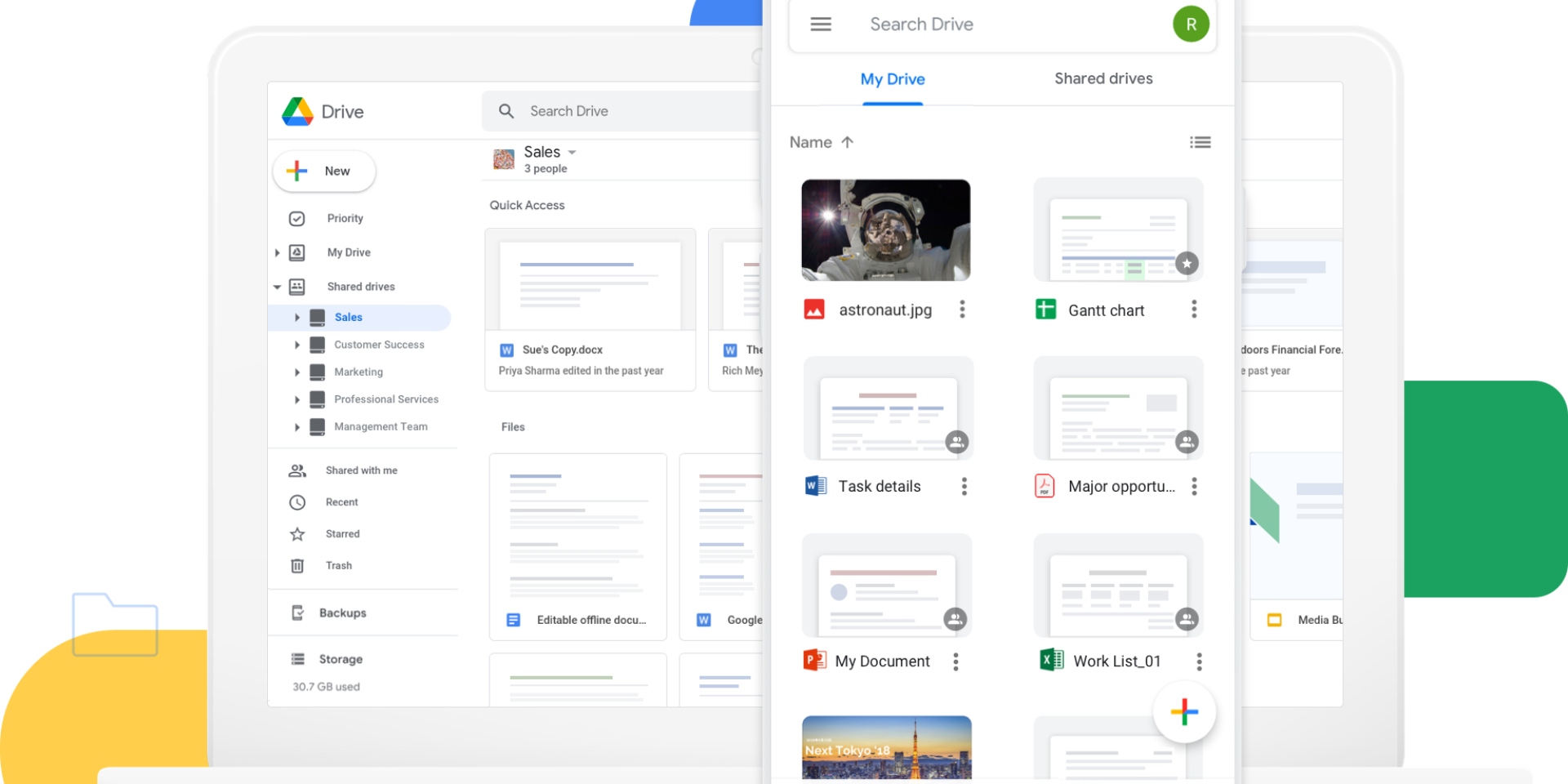

In mid-December, the tech giant Google, based in Mountain View, announced new terms of use for its cloud storage service, Google Drive. These changes grant Google the right to ban files that violate the company's policies.

Google also said it would wait two weeks (until the end of December or the beginning of January) before starting to scan the content of its users' files stored in the cloud in order to detect files it considers "inappropriate", which do not necessarily have to be illegal.

“We may review content to determine whether it is illegal or violates our Program Policies, and we may remove or refuse to display content that we reasonably believe violates our policies or the law.”

We can therefore already say with some certainty that some users are now being subjected to this protocol for reviewing hosted files.

An analysis open to arbitrariness

During the review process, once Google's automated systems detect a possible violation of its policies, experts must check the suspicious content and decide what measures to take: for instance, restricting third-party access (preventing the user from sharing the inappropriate file online), deleting the file itself, or banning the user outright from all the services this IT giant provides.

Google has indicated that the goal of these measures is to avoid hosting malware, sexually explicit material, hate speech and content that poses a threat to minors.

However, these new policies have already drawn considerable criticism, since Google has not clearly defined which content it considers "inappropriate" by its standards.

This lack of definition has caused fear among the platform's users, who understand that Google could arbitrarily prevent them from accessing their own files.

For example, would a user's intimate pictures be considered inappropriate? Or are those pictures covered by the exceptions Google lists as "based on artistic, educational, documentary or scientific considerations"?

However, Google has pointed out that it has created a procedure for requesting a review of such decisions, although it has not yet specified how the procedure will work or how long it will take.

The Digital Services Act (DSA) follows Google's policy

The new policy adopted by Google will surely be adopted by more companies, since the European Parliament is currently debating the Digital Services Act, which has been in the parliamentary process for two years and whose text will surely see the light this year.

Under the premise that what is not legal offline should not be legal online either, the main goal of this European act is to harmonize and update the rules on the responsibilities and obligations of digital service providers, and of online platforms in particular. The purpose is to create an online environment that is safe, predictable, trustworthy and transparent, where individual fundamental rights are protected and reinforced while more responsibility is required from those digital services.

In this way, the Digital Services Act intends to give digital platforms the power to suppress or block content considered “non-adequate”, even if it is not illegal.

In our opinion, this right to suppress or block files, which the digital platforms that host content will exercise, will become a headache, since in many cases it could clash with fundamental rights such as privacy or freedom of expression. Calibrating arbitrary action by digital platforms thus becomes an extremely complex task.